Optical Low Pass Filter Study

To better understand exactly what the Anti-Aliasing (AA) / Blur / Optical Low Pass Filter (OLPF) does optically, we studied the actual OLPF on a Canon 5D MK II. AA, Blur and OLPF are all names for the same thing. To get close enough to take a picture of the Color Filter Array (CFA), or Bayer pattern, of a sensor, you need to be able to take the sensor coverglass off. Our monochrome conversion requires removing the sensor coverglass and, in the course of our R&D, we have accumulated a collection of dead sensors. Since we happen to have a Canon 5D MK II sensor without the coverglass and with the CFA intact, we can take pictures of the actual CFA and make measurements. Since we can see at the camera pixel level, we can also see the effects of the OLPF.

We took a series of pictures using a 10 micron glass scale, the sensor and the OLPF. We took one picture of the glass scale, one picture of the scale + the OLPF and another picture of the actual sensor. By taking a picture of the scale with the OLPF, we can easily see the blur and quantify the amount of blur introduced.

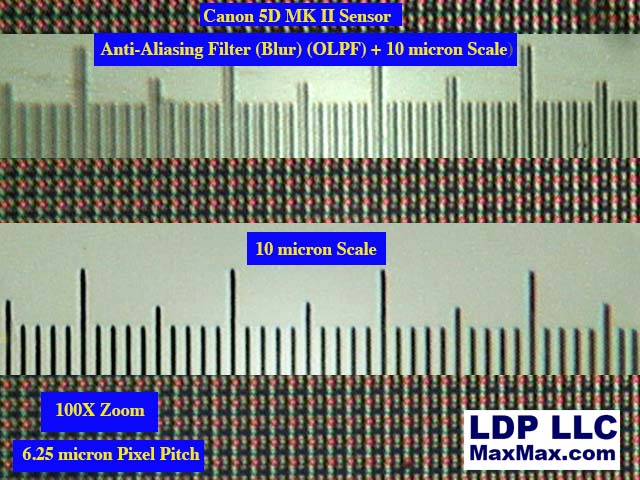

At 100X zoom:

The top scale in the picture shows how the scale looks when viewed through the OLPF. You can see that the hatch lines have been displaced to the left. The bottom scale shows the scale with no OLPF. In the background, you can see the sensor Bayer Filter or Color Filter Array (CFA).

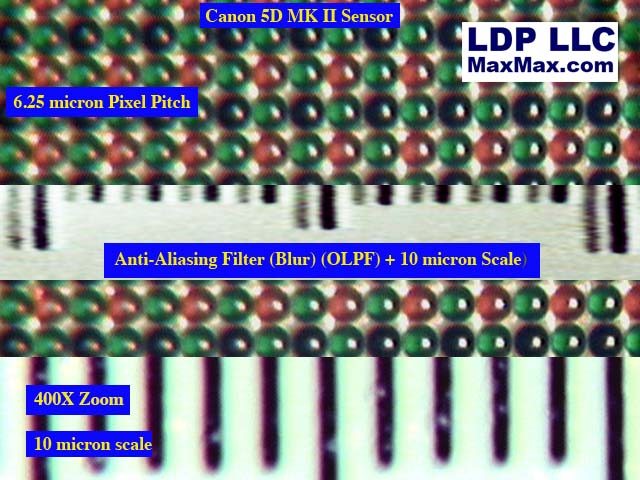

At 400X zoom:

In the 400X zoom picture, you can better see the CFA, the amount of blur and the 10 micron scale. For the Canon 5D MK II sensor, it appears that the OLPF displaces the image by approximately one pixel. The complete OLPF has two layers. These pictures show one layer, or half of the blur filter. The second layer blurs the image by one pixel in the vertical direction. This means that for any one point of light, you end up with 4 points separated by 1 pixel, covering the same area as one R-G-G-B CFA square. You have 4 points because the first layer gives you 2 points, and then the second layer doubles those to 4 points.
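
The doubling described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: each layer splits a point into two equal halves, the shift is exactly one pixel, and the shift directions (positive x, then positive y) are arbitrary choices. The function names are ours and do not come from any camera software.

```python
def split_horizontal(points, shift=1):
    """Model one OLPF layer: each (x, y, intensity) point becomes two
    half-intensity points, one of them displaced horizontally."""
    out = []
    for x, y, w in points:
        out.append((x, y, w / 2))
        out.append((x + shift, y, w / 2))
    return out


def split_vertical(points, shift=1):
    """Model the second layer: same split, displaced vertically."""
    out = []
    for x, y, w in points:
        out.append((x, y, w / 2))
        out.append((x, y + shift, w / 2))
    return out


# A single point of light with unit intensity at pixel (0, 0).
after_first_layer = split_horizontal([(0, 0, 1.0)])
spots = split_vertical(after_first_layer)

for x, y, w in spots:
    print(f"pixel ({x}, {y}) receives {w:.2f} of the light")
```

The four resulting spots sit on a 2x2 block of pixels, each carrying a quarter of the original light, which is the footprint of one R-G-G-B square.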

For another camera, the manufacturer might choose to displace the light differently. For many 4/3 cameras, we see more blur than for APS and full frame sensors. Sometimes manufacturers make odd choices in the amount of blur. For example, the APS Nikon D70 sensor had much less physical blur than the APS Nikon D200 sensor, despite the D70 having a pixel pitch of 7.8 microns and the D200 having a pixel pitch of 5.8 microns.

OLPFs are typically made from lithium niobate, a birefringent crystal that splits incoming light into two beams with different polarizations. The thickness of the crystal determines the amount of physical displacement. The orientation of the crystal determines whether the crystal displaces right, left, up or down.

That physical displacement then has to be related to the pixel spacing, or pixel pitch, of the sensor. The thicker the OLPF, the greater the displacement. If the pixel pitch is 10 microns and the OLPF blur is 20 microns, then the amount of blur at a pixel level would be the same as for a sensor pixel pitch of 5 microns and an OLPF blur of 10 microns. Since camera manufacturers tend to use the same lithium niobate for the OLPF, we can estimate the amount of blur from the thickness of the OLPF rather than measuring it directly as we did in this study.
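
The relationship between physical displacement and pixel-level blur is a simple ratio. The short sketch below works through the two cases from the paragraph above; the function name `blur_in_pixels` is our own, introduced only for illustration.

```python
def blur_in_pixels(displacement_um, pixel_pitch_um):
    """Express an OLPF displacement (in microns) in units of sensor pixels."""
    if pixel_pitch_um <= 0:
        raise ValueError("pixel pitch must be positive")
    return displacement_um / pixel_pitch_um


# The two cases from the text: different physical blur, same pixel-level blur.
coarse_sensor = blur_in_pixels(displacement_um=20, pixel_pitch_um=10)
fine_sensor = blur_in_pixels(displacement_um=10, pixel_pitch_um=5)

print(coarse_sensor, fine_sensor)  # 2.0 2.0
```

Both sensors see a blur of two pixels even though the second OLPF displaces the light by only half the physical distance.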

As camera sensors have increased the pixel density, the typical OLPF has gotten thinner. Manufacturers are using the same amount of blur at a pixel level but less blur at a physical displacement level.

The OLPF is one source of blur that is purposely added to the camera to reduce moire, or digital sampling errors, which occur when the frequency of the input signal approaches the resolution of the digital sampling device, in this case the sensor (Nyquist Theorem). The important thing to keep in mind is that this sampling problem occurs when you have an input signal that is repeating at or near the frequency of the sensor. If you don't have the repeating signal, then you don't get the moire. For a camera sensor, a repeating signal might be the weave of a certain fabric or a screen window. If a repeating signal does cause a problem, then as soon as you move closer or further away, the spacing changes and the moire disappears. Curiously, if the manufacturers wanted to completely eliminate moire, they would have to blur the signal by a minimum of 3x3, or 9 pixels, because of the R-G-G-B color filter array. In this study, the Canon 5D MK II has a 2x2, or 4 pixel, blur filter. Our guess is that the manufacturer didn't want to blur the image quite that much because of the loss of resolution, so they picked a midpoint to stop some moire without sacrificing too much resolution. Because of their choice of a 2x2 matrix, you can see color moire patterns on a 5D MK II, though you won't see them in a monochrome image.
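
To make the sampling problem concrete, here is a minimal one-dimensional sketch using only the Python standard library. It assumes an idealized sensor that samples a cosine pattern at integer pixel positions, with no OLPF and no color filter array. The pattern period of 1.1 pixels is an arbitrary example value, and the function names are ours.

```python
import math


def sample_cosine(period_px, n_samples):
    """Sample a cosine of the given period (in pixels) at integer pixel positions."""
    return [math.cos(2 * math.pi * n / period_px) for n in range(n_samples)]


def alias_period(period_px):
    """Period (in pixels) of the pattern the samples appear to show.

    The apparent frequency is the true frequency folded against the nearest
    multiple of the sampling rate (one sample per pixel).
    """
    f = 1.0 / period_px
    f_alias = abs(f - round(f))
    return math.inf if f_alias == 0 else 1.0 / f_alias


fine = sample_cosine(1.1, 22)     # detail slightly finer than the pixel grid can record
coarse = sample_cosine(11.0, 22)  # a much coarser pattern

# At the pixel positions the two signals are indistinguishable.
max_difference = max(abs(a - b) for a, b in zip(fine, coarse))
print(f"apparent period: {alias_period(1.1):.2f} pixels")
print(f"largest sample difference: {max_difference:.1e}")
```

Fine detail repeating every 1.1 pixels is recorded as a false pattern repeating roughly every 11 pixels. Changing `period_px` slightly, as happens when you move closer to or further from the subject, changes the apparent period, which is why moire tends to come and go with distance.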

The manufacturers know that most resolution tests are done with black and white test charts where all the pixels are either on or off. For a black and white test chart, a 2x2 blur pattern is sufficient because all pixels are on or off. But shoot that same test chart under a pure red, blue or green light, and you will see resolution drop off dramatically and much more apparent moire issues. In real life, cameras are typically shooting a color scene.

For a color digital sensor, resolution drops with color content. If you shoot a scene illuminated with a blue LED light as the sole source of illumination, only 25% of the pixels can see anything because only 1 in 4 pixels is blue. This is why our monochrome camera conversions will always have superior resolution in any picture that contains color.
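
The 1 in 4 figure follows directly from the layout of the R-G-G-B tile. As a quick check, the sketch below builds a small mosaic and counts the pixels of each color. The RG/GB tile ordering and the 4x6 mosaic size are assumptions made for illustration; real sensors may start the pattern on a different corner, which does not change the fractions.

```python
from collections import Counter


def bayer_mosaic(rows, cols, tile=("RG", "GB")):
    """Build a rows x cols grid of color letters by repeating a 2x2 Bayer tile."""
    return [[tile[r % 2][c % 2] for c in range(cols)] for r in range(rows)]


def color_fractions(mosaic):
    """Fraction of pixels behind each filter color."""
    counts = Counter(letter for row in mosaic for letter in row)
    total = sum(counts.values())
    return {color: n / total for color, n in counts.items()}


fractions = color_fractions(bayer_mosaic(4, 6))
for color in "RGB":
    print(f"{color}: {fractions[color]:.0%} of pixels")
```

Under pure blue or pure red light only a quarter of the pixels respond, and under pure green light half of them do. A monochrome sensor with no CFA has every pixel responding in all three cases.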

Below is an example of a stock Canon 30D with the CFA and OLPF on the top and a monochrome 30D with no OLPF on the bottom. You can see that the blue and red resolution of the color camera is the worst, which is what we would expect. The green is sharper than the blue and red because 50% of the pixels are green. Monochrome is sharper than color because all pixels can see every color. The monochrome camera's B&W image on the far right is sharper than that of the CFA camera because the monochrome camera has no OLPF.

Blur can occur for many other reasons, but two big ones are lens diffraction at small apertures and lens Airy disk diffraction at wide apertures. Small aperture lens diffraction increases as the aperture gets smaller (higher F-stop). A perfect lens also has an Airy disk, which is another sort of diffraction. The size of the Airy disk is related to the wavelength of light, with shorter wavelengths producing smaller circles. When the pixels get small enough, and if your lens is good enough, the sensor sees the Airy disks as donuts, or concentric circles. There are many factors to consider, but below about 4.5 micron pixel spacing, you hit the limit of sensor resolution for visible light from the lens Airy disk diffraction. With many current camera sensors already at 4.5 microns or smaller, the Airy disk is something you need to strongly consider because adding pixels won't give you more resolution. If your lens isn't good enough, it won't be able to produce an Airy disk on the sensor. Either way, you hit physical limits on resolution when you are constrained by the sensor size. If you move to a larger sensor, you can increase the possible resolution. In our opinion, this is why the small point and shoot cameras that have high megapixel counts aren't increasing resolution, just file size. The point and shoot lens isn't good enough, the optics move on plastic parts, and the pixels are too small, making the whole system limited.
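
For a rough sense of scale, the diameter of the Airy disk out to its first dark ring for an ideal circular aperture is commonly given as 2.44 times the wavelength times the F-number. The sketch below applies that standard formula and compares the result with the 4.5 micron pixel spacing mentioned above. The wavelengths (450, 550 and 650 nm for blue, green and red) and the F/4 aperture are illustrative assumptions, not measurements from this study, and the function name is ours.

```python
def airy_disk_diameter_um(wavelength_nm, f_number):
    """Airy disk diameter to the first dark ring, in microns,
    for an ideal (diffraction-limited) lens with a circular aperture."""
    wavelength_um = wavelength_nm / 1000.0
    return 2.44 * wavelength_um * f_number


PIXEL_PITCH_UM = 4.5  # pixel spacing discussed in the text
F_NUMBER = 4.0        # assumed aperture, for illustration only

for name, wavelength_nm in (("blue", 450), ("green", 550), ("red", 650)):
    diameter = airy_disk_diameter_um(wavelength_nm, F_NUMBER)
    print(f"{name}: {diameter:.2f} um across, "
          f"{diameter / PIXEL_PITCH_UM:.2f} pixels at {PIXEL_PITCH_UM} um pitch")
```

The formula shows the two trends described above: the disk grows as the aperture gets smaller (higher F-number), and shorter wavelengths produce smaller disks. How large the disk can get before resolution visibly suffers depends on the criterion you pick, so treat the comparison with pixel pitch as a guide rather than a hard threshold.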

Most consumer DSLR cameras have an OLPF. Nikon recently made news when they announced they would sell the Nikon D800 without an OLPF as the D800e. Nikon isn't exactly selling a camera without an OLPF, though. To make manufacturing easier, they have one OLPF layer displacing light in one direction, and then another displacing the light in the opposite direction so that the light ends up back where it started. It was easier for them to do this than to make a completely different sensor.

However, our guess is that Nikon customers who try to test the difference between the Nikon D800 and D800e will be hard pressed to see one. With a pixel pitch of 4.88 microns, the sensor is near the Airy disk limit. The 36.3 megapixel sensor is probably beyond the limit of many lenses. However, the fact that Nikon has chosen to sell a more expensive D800e version without the OLPF lends credence to our long standing opinion that the OLPF is, in general, an unwanted thing for most camera uses. No medium format camera back has one because those customers wouldn't buy it. We suspect that the use of the OLPF in consumer DSLRs is more of a marketing concern: manufacturers don't want someone posting a picture of a test chart online showing how a camera without an OLPF could exhibit moire. In most real life photographs, moire isn't an issue.

When we perform an HR conversion, we remove the OLPF and ICF stack and replace it with a new ICF-Only. This gives the camera better resolution capability. Of course, you also need a good enough lens to see the results, and everything else in the system (camera, lighting, lens, technique, target, etc.) needs to be operating correctly. When one takes a picture, the camera needs to start with the correct optical information. By the time the user sees something on their computer screen, the optical data has been transformed in many ways, such as adjustments for dark level, dead pixels and hot pixels, de-noising, sharpening, white balance, debayering and many more steps. However, in our opinion, you always want to start with a clean optical signal.