Debayer Study

To see the monochrome models currently available, you can go to our store section by clicking here.

In 2007, we started building machinery to convert a color sensor to a monochrome (or, more exactly, panchromatic) sensor. By 2009, we had our first successful conversion, and we have developed a lot of experience since then. We are now on our third generation of processing equipment and get excellent, repeatable, edge-to-edge results on selected sensors.

Why use a monochrome camera? Because a monochrome camera will always take a much sharper image than an equivalent color camera, whose resolution depends on the color content of the picture. With a monochrome camera, you give up color information and gain spatial resolution. Converting a color sensor to monochrome is a technically challenging process. After taking the camera apart to remove the image sensor, we have to remove the ICF/AA filter stack and the sensor coverglass, and then about 5 microns of microlenses and Color Filter Array (CFA) that have been photolithographically printed in epoxy on the surface of the sensor.

To understand why a monochrome sensor has higher resolution than a color sensor, you have to understand how a color camera sensor works. We have a rather technical explanation for those so inclined. For the artistic types who aren't interested in the science, please just consider the two pictures below.

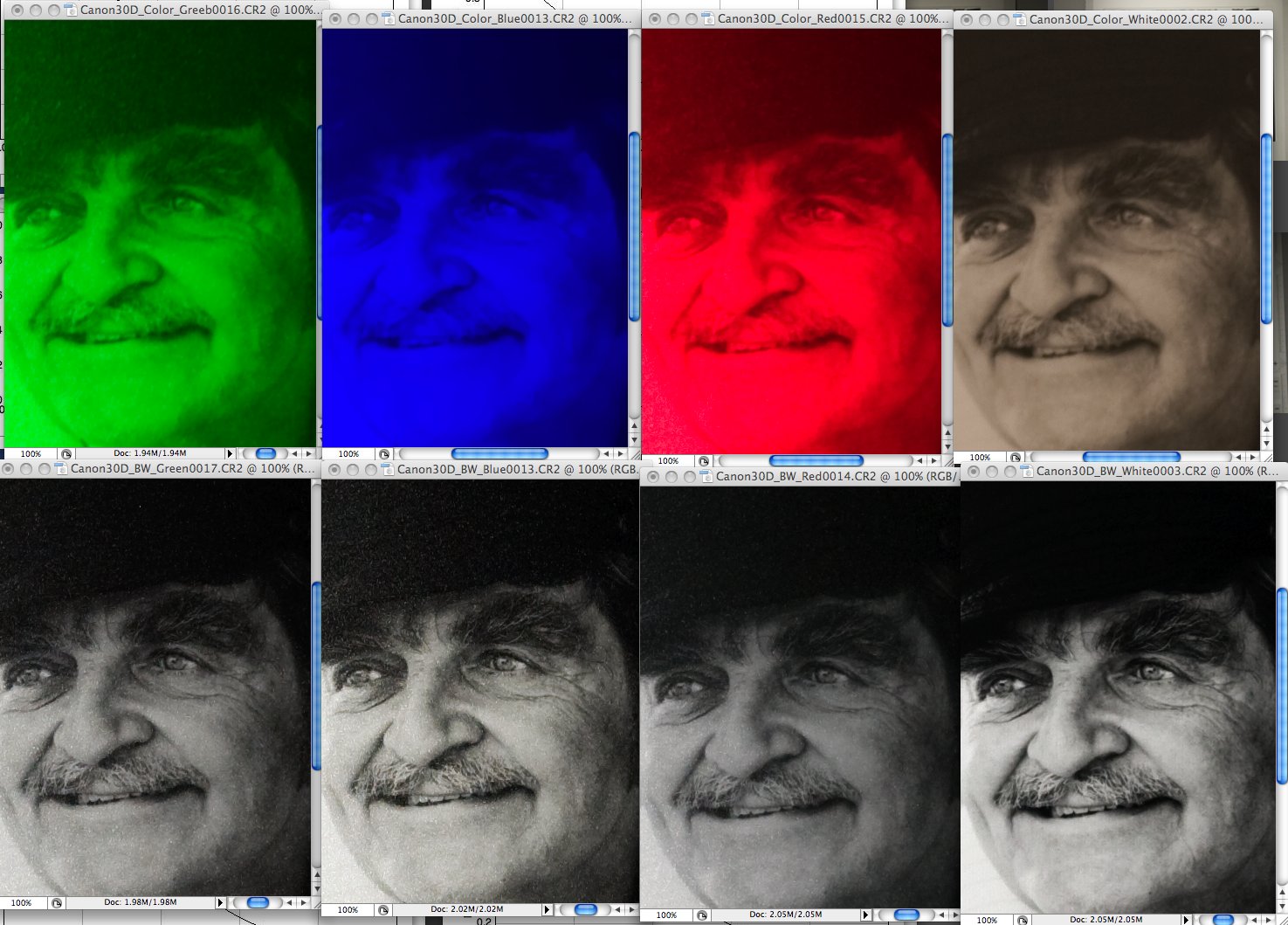

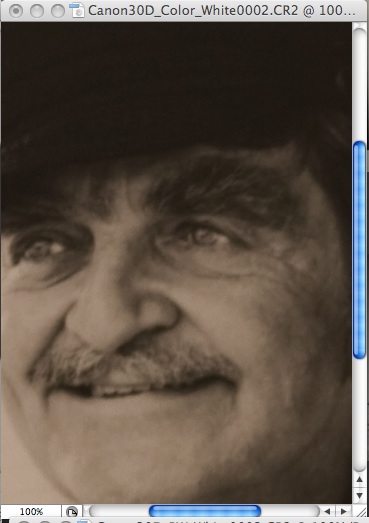

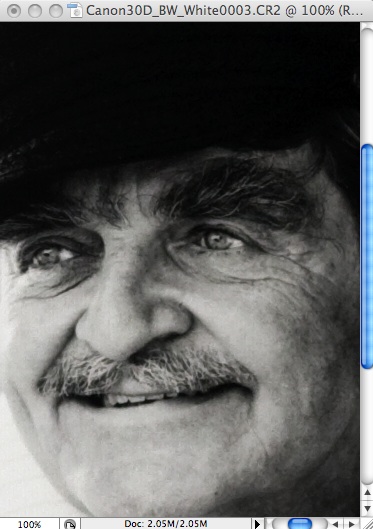

Stock Canon 30D taking a black and white picture

Our modified Canon 30D with Color Filter Array (CFA) removed

Which picture looks better to you? Both pictures are crops of the same area of a larger picture, zoomed to 100% in Photoshop.

Some history: Kodak made a series of monochrome DSLR cameras, starting with the DCS-420m (1.2 megapixels), then the DCS-460m (6 megapixels) and lastly the DCS-760m (improved 6 megapixels). The DCS-760m sold for $10,000.00 in 2001. Unfortunately, the market for B/W DSLR cameras is quite small, and Kodak discontinued the line. Today, Kodak has discontinued all of its DSLR cameras, and the company is a small fraction of the size it once was. Some medium format monochrome data backs are available, but they are quite expensive.

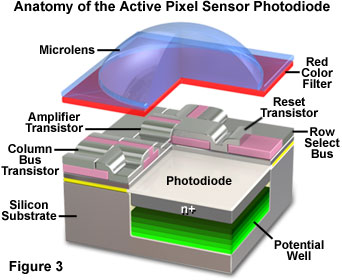

A camera sensor is composed of many different layers. From the top, the first layers are:

- Microlens. Focuses light onto its particular pixel. There is one microlens for each pixel.

- Color Filter Array (CFA). A pattern of red, green and blue dots is photolithographically printed across the surface, so each pixel sees only one of the three colors: red, green or blue. The CFA is usually arranged in a Bayer pattern of Red, Green, Blue, Green. A 10 megapixel camera will therefore have 5 million green pixels, 2.5 million red pixels and 2.5 million blue pixels.

- Black and White Photodiode. Under the color filter is a black and white device that sees light. A 10 megapixel camera will have 10 million black and white pixels.
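The pixel counts above follow directly from the 2x2 Bayer tile. Below is a minimal Python sketch that counts how many photodiodes sit under each filter color. It assumes an RGGB phase (red in the top-left corner); real sensors may start on a different phase, which changes which corner is red but not the totals. The function names and the 4000 x 2500 example size are hypothetical, chosen only to give 10 megapixels.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color over pixel (row, col), assuming an RGGB Bayer tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"  # even rows: R G R G ...
    return "G" if col % 2 == 0 else "B"      # odd rows:  G B G B ...


def count_cfa_pixels(width: int, height: int) -> dict[str, int]:
    """Count pixels under each CFA color without looping over every pixel."""
    even_rows, odd_rows = (height + 1) // 2, height // 2
    even_cols, odd_cols = (width + 1) // 2, width // 2
    return {
        "R": even_rows * even_cols,                        # even row, even column
        "G": even_rows * odd_cols + odd_rows * even_cols,  # the two green sites
        "B": odd_rows * odd_cols,                          # odd row, odd column
    }


if __name__ == "__main__":
    # Hypothetical 10 megapixel sensor: 4000 x 2500 pixels.
    print(count_cfa_pixels(4000, 2500))
    # prints {'R': 2500000, 'G': 5000000, 'B': 2500000}
```

Half of the photodiodes are green and a quarter each are red and blue, while the photodiodes themselves (10 million of them) are all the same monochrome device.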

The picture above shows the structure of a red pixel. A green pixel will have a green color filter, and a blue pixel will have a blue color filter.

Pixels are usually arranged in a Bayer Red-Green-Blue-Green pattern. Green is used twice as often as red and blue to more closely mimic the human eye, which sees green better than any other color.
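To make the "one color per pixel" idea concrete, here is a hypothetical Python sketch that simulates what a Bayer sensor records from a scene: every output pixel keeps only the channel its filter passes. It assumes an RGGB layout and idealized filters that pass only their own color; real CFA dyes overlap spectrally, so this overstates the separation. The names `mosaic` and `bayer_color` are illustrative, not taken from any camera's firmware.

```python
Pixel = tuple[float, float, float]  # (red, green, blue) scene intensity, 0.0 to 1.0

CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def bayer_color(row: int, col: int) -> str:
    """Filter color over pixel (row, col), assuming an RGGB Bayer tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(image: list[list[Pixel]]) -> list[list[float]]:
    """Return the single-channel raw values an idealized Bayer sensor records."""
    return [
        [pixel[CHANNEL_INDEX[bayer_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]


if __name__ == "__main__":
    # A uniform, pure-blue 4 x 4 scene: only the blue-filtered sites respond.
    blue_scene = [[(0.0, 0.0, 1.0)] * 4 for _ in range(4)]
    for raw_row in mosaic(blue_scene):
        print(raw_row)
```

Only 4 of the 16 raw values come out non-zero (the odd-row, odd-column sites); the other three quarters of the pixels record nothing of a pure blue subject.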

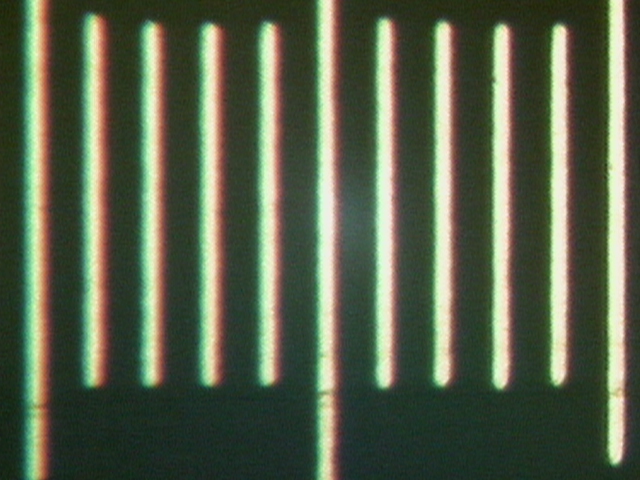

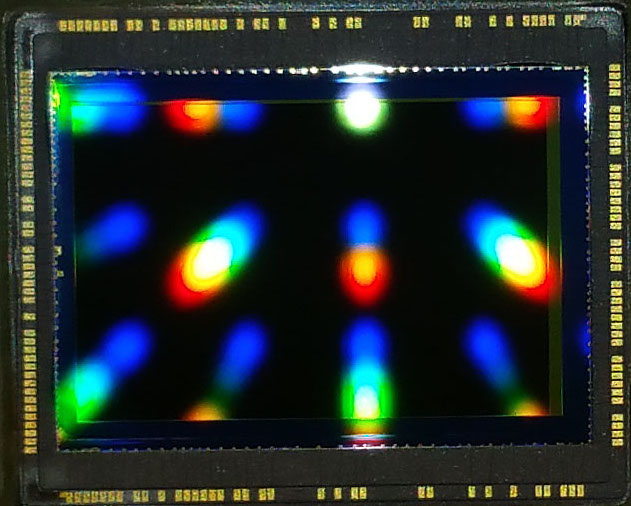

Canon 450D image sensor showing the CFA

For size comparison, the lines above are 0.01 mm (10 microns) apart.

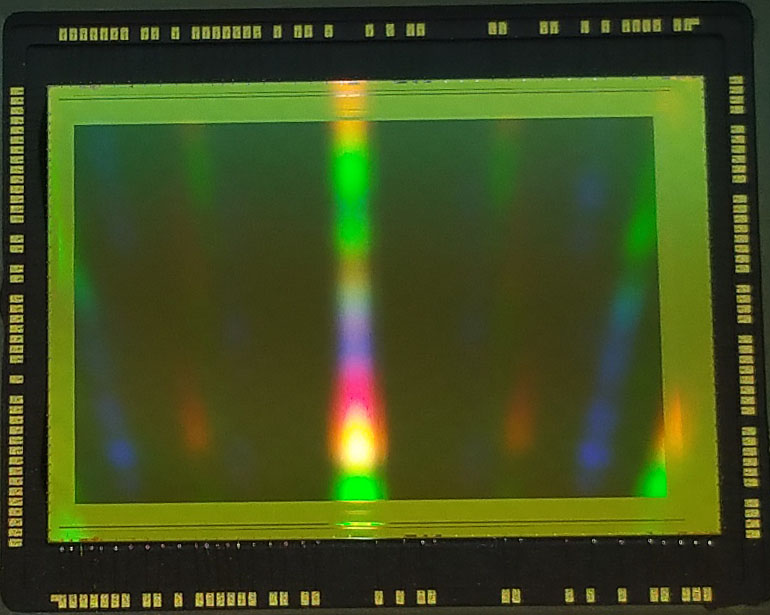

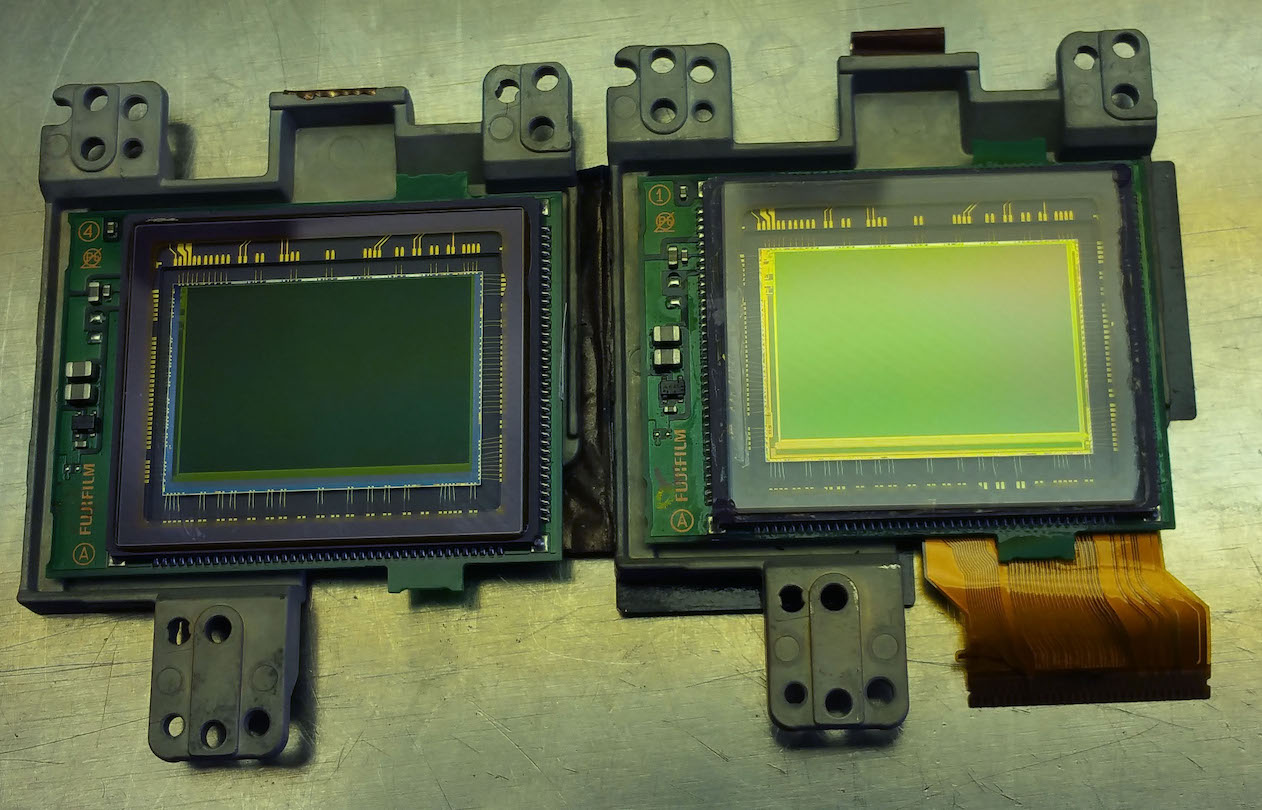

Canon 7D MK II stock and after converting it to monochrome

Fuji X-Pro1 stock and after converting to monochrome

Most camera resolution reviews perform their tests with a black and white test target under white light. A black and white target shows the maximum resolution a color camera is capable of, because the target only has luminance (brightness) data and no chrominance (color) data. Why is this important? Remember, for every four pixels on a color sensor, you get 2 green, 1 red and 1 blue. So suppose the target was illuminated with blue light. Only 1/4 of the pixels could see the blue light. Effectively, your 10 megapixel camera just turned into a 2.5 megapixel camera.
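The arithmetic in the paragraph above can be sketched as follows. This is an idealized Python model, assuming an RGGB sensor whose filters pass only their own color (real filters overlap, so some "wrong" pixels respond weakly) and a monochrome sensor on which every photodiode responds to every color, ignoring differences in sensitivity. The dictionary and function names are hypothetical.

```python
# Fraction of RGGB pixels whose filter passes each illumination color
# (idealized: no spectral overlap between the red, green and blue filters).
CFA_FRACTION = {"white": 1.0, "green": 0.5, "red": 0.25, "blue": 0.25}


def responding_megapixels(total_mp: float, light: str, monochrome: bool) -> float:
    """Megapixels that can record a subject lit by the given color."""
    if monochrome:
        return total_mp  # no CFA: every photodiode can see every color
    return total_mp * CFA_FRACTION[light]


if __name__ == "__main__":
    for light in CFA_FRACTION:
        color_mp = responding_megapixels(10.0, light, monochrome=False)
        mono_mp = responding_megapixels(10.0, light, monochrome=True)
        print(f"{light:>5} light: color {color_mp:4.1f} MP, monochrome {mono_mp:4.1f} MP")
```

Under this model the monochrome sensor keeps all 10 megapixels for every light color, while the color sensor drops to 5 under green and 2.5 under red or blue, which is the same reason a red rose can look soft.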

The color content of the picture will change a color camera's resolution. This is why a red rose sometimes looks blurry in comparison to other parts of a picture. The red rose is only triggering 1/4 of the pixels.

To test our theory, we performed the following test.

- We used a variety of resolution test targets in a room with controlled lighting.

- We started with two Canon 30D cameras. We converted one to monochrome by removing the AA filter, microlenses and CFA. This is much easier said than done.

- White light and pure red, green and blue light were used to illuminate the targets.

- All pictures were taken in RAW.

- Both cameras were mounted on a tripod and set to ASA 200, with mirror lockup and a timed shutter release.

- Results were analyzed in ImageJ to measure the sharpness (Modulation Transfer Function or MTF)

- Results were also viewed in Photoshop
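We don't describe which ImageJ method was used, so the following is only a simplified illustration of one common way to get an MTF curve: the edge method. A profile taken across a sharp black-to-white edge (the edge spread function) is differentiated to get the line spread function, and the magnitude of its discrete Fourier transform, normalized to 1 at zero frequency, is the MTF. Standard slanted-edge measurements (as in ISO 12233) also tilt the edge to oversample it and apply windowing; this pure-Python sketch skips all of that, and `edge_mtf` is a hypothetical name.

```python
import cmath


def edge_mtf(edge_profile: list[float]) -> list[tuple[float, float]]:
    """Estimate MTF from a 1-D edge profile (edge spread function).

    Returns (frequency in cycles/pixel, MTF) pairs from 0 up to Nyquist (0.5).
    """
    # Line spread function: the first difference (derivative) of the edge profile.
    lsf = [b - a for a, b in zip(edge_profile, edge_profile[1:])]
    n = len(lsf)
    dc = abs(sum(lsf))  # zero-frequency term, used to normalize MTF(0) to 1
    if dc == 0:
        raise ValueError("profile contains no edge")
    curve = []
    for k in range(n // 2 + 1):
        # k-th term of the discrete Fourier transform of the LSF
        term = sum(v * cmath.exp(-2j * cmath.pi * k * i / n) for i, v in enumerate(lsf))
        curve.append((k / n, abs(term) / dc))
    return curve


if __name__ == "__main__":
    sharp = [0, 0, 0, 0, 1, 1, 1, 1, 1]          # ideal step edge
    blurred = [0, 0, 0, 0.25, 0.75, 1, 1, 1, 1]  # same edge spread over 3 pixels
    for (f, m_sharp), (_, m_blur) in zip(edge_mtf(sharp), edge_mtf(blurred)):
        print(f"{f:.3f} cycles/pixel: sharp {m_sharp:.2f}, blurred {m_blur:.2f}")
```

The ideal edge keeps an MTF of 1.0 at every frequency, while the blurred edge falls from 1.0 to 0.0 at 0.5 cycles/pixel: the softer the edge, the faster the curve drops.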

Results.

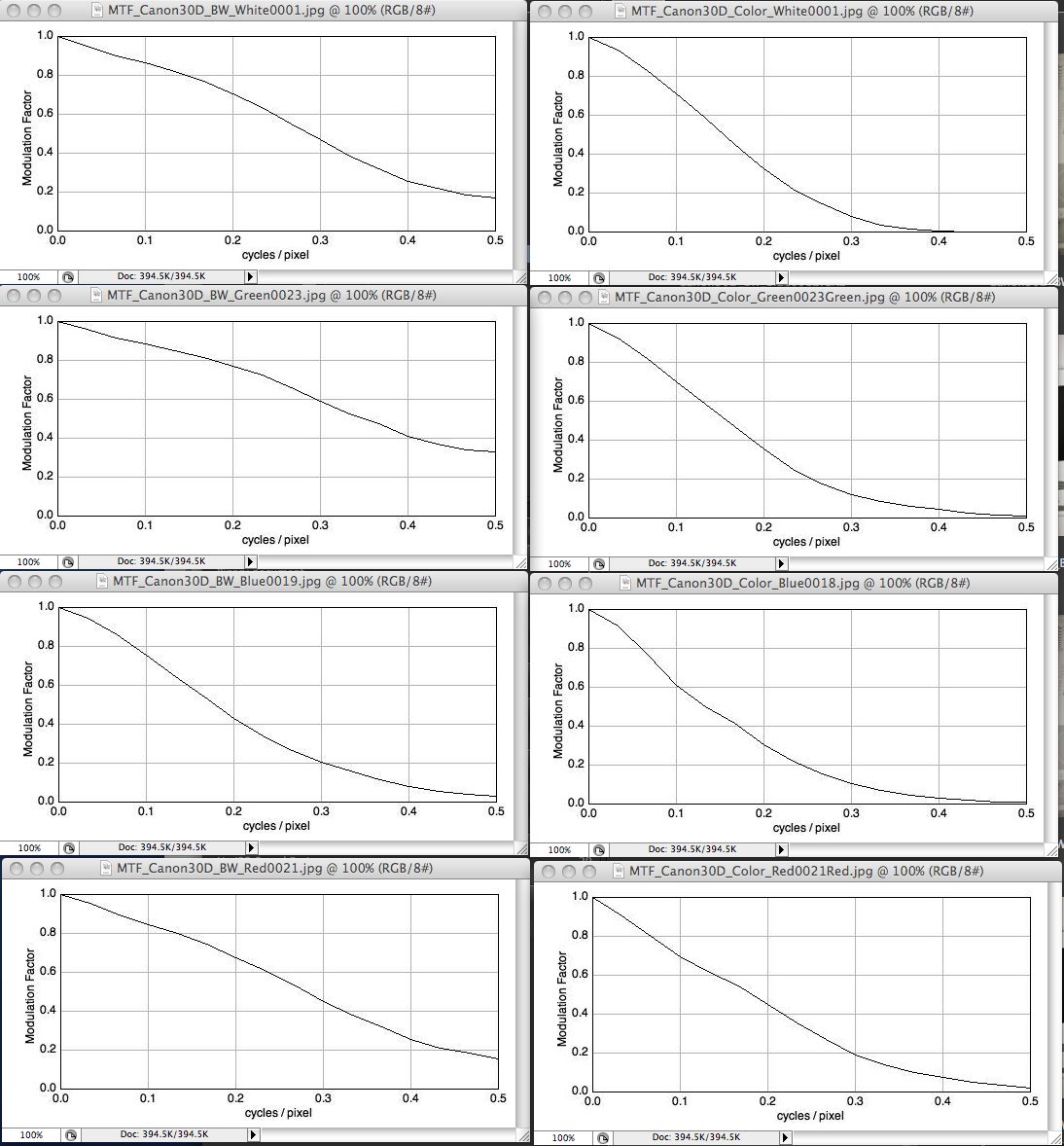

First we will show the MTF of the two cameras. The monochrome camera is the left column. For those who would like to understand more about MTF, The Luminous Landscape has a good discussion here. Basically, MTF is a mathematical way to quantify lens and camera sharpness. One can easily look at two pictures and see that one is sharper than the other, but the question is "how much?" MTF allows us to put numbers to what we can see.
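At a single spatial frequency, the idea reduces to one ratio: how much of the target's contrast survives in the image. Here is a minimal Python sketch, assuming an intensity profile measured across a bar or sine target of one frequency and the simple Michelson definition of modulation (real measurements usually average many peaks and valleys rather than taking a single maximum and minimum). The function names are hypothetical.

```python
def modulation(profile: list[float]) -> float:
    """Michelson modulation (Imax - Imin) / (Imax + Imin) of an intensity profile."""
    i_max, i_min = max(profile), min(profile)
    if i_max + i_min == 0:
        raise ValueError("profile is completely dark")
    return (i_max - i_min) / (i_max + i_min)


def mtf_at_frequency(target_profile: list[float], image_profile: list[float]) -> float:
    """MTF at one frequency: modulation in the image divided by modulation in the target."""
    return modulation(image_profile) / modulation(target_profile)


if __name__ == "__main__":
    target = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]       # full-contrast bars
    image = [0.25, 0.75, 0.25, 0.75, 0.25, 0.75]  # same bars after blurring
    print(mtf_at_frequency(target, image))  # prints 0.5
```

An MTF of 1.0 means the bars are reproduced with full contrast; 0.0 means they have blurred into uniform gray. Repeating the measurement at many bar spacings traces out an MTF curve.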

The further the MTF curve extends to the right and the higher it is, the sharper the picture. There are four MTF graphs for each camera: the first shows sharpness under white light, followed by green, then blue and lastly red. The blue light was the weakest light, so the blue MTF showed the worst performance for both cameras. You can see that the B/W camera has much higher performance: at 0.2 cycles/pixel the monochrome camera has twice the sharpness, and at 0.30 cycles/pixel it has 5x the sharpness.
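The horizontal axis of these graphs is in cycles per pixel. One cycle (a dark line plus a light line, i.e. one line pair) needs at least two pixels, so 0.5 cycles/pixel is the Nyquist limit. To translate the figures into line pairs per millimeter on the sensor, multiply by the number of pixels per millimeter. The Python sketch below does that conversion; the 5 micron pixel pitch in the example is a hypothetical value, not the Canon 30D's actual pitch.

```python
NYQUIST_CYCLES_PER_PIXEL = 0.5  # one cycle needs at least two pixels


def cycles_per_pixel_to_lp_per_mm(cycles_per_pixel: float, pixel_pitch_um: float) -> float:
    """Convert a frequency in cycles/pixel to line pairs per mm on the sensor."""
    if not 0.0 <= cycles_per_pixel <= NYQUIST_CYCLES_PER_PIXEL:
        raise ValueError("frequency must be between 0 and Nyquist (0.5 cycles/pixel)")
    pixels_per_mm = 1000.0 / pixel_pitch_um  # 1 mm = 1000 microns
    return cycles_per_pixel * pixels_per_mm


if __name__ == "__main__":
    # Hypothetical 5 micron pixel pitch.
    for f in (0.2, 0.3, NYQUIST_CYCLES_PER_PIXEL):
        print(f"{f} cycles/pixel = {cycles_per_pixel_to_lp_per_mm(f, 5.0):.0f} lp/mm")
```

With that assumed pitch, 0.2 and 0.30 cycles/pixel correspond to 40 and 60 lp/mm, and Nyquist to 100 lp/mm.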

You can see that in every case, the monochrome camera substantially outperforms the stock color version.

Real life performance.

The title of each picture describes the particular test. First, we show the color camera taking the same picture under green, blue, red and white light. Note that the green picture has the highest resolution because there are twice as many green pixels as red or blue. Notice also that the blue picture shows the most noise, which may be due to the blue light being the dimmest and/or the sensor's response to blue light. Finally, notice the difference between the color and monochrome cameras.

You can click on the pictures to download a TIFF version.

At 50% in Photoshop

At 100% in Photoshop

Q.E.D.

That's why a monochrome sensor has an advantage over a color sensor when taking black and white pictures.